|

import bs4  # needed for bs4.SoupStrainer in the loader below
from langchain_chroma import Chroma
from langchain_community.document_loaders import WebBaseLoader
from langchain_core.chat_history import BaseChatMessageHistory
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.prompts import MessagesPlaceholder
from langchain_openai import ChatOpenAI
from langchain_openai import OpenAIEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langchain_core.messages import AIMessage, HumanMessage
from langchain.globals import set_debug
from langchain_core.runnables.history import RunnableWithMessageHistory
from langchain_community.chat_message_histories import ChatMessageHistory
from langchain.chains.combine_documents import create_stuff_documents_chain
from langchain.chains import create_history_aware_retriever
from langchain.chains import create_retrieval_chain

# Turn off LangChain's verbose debug output.
set_debug(False)

# Load the blog post, keeping only the title, header, and body elements.
loader = WebBaseLoader(
    web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),
    bs_kwargs=dict(
        parse_only=bs4.SoupStrainer(
            class_=("post-content", "post-title", "post-header")
        )
    ),
)
docs = loader.load()

# Split the page into overlapping chunks and index them in a vector store.
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
splits = text_splitter.split_documents(docs)
vectorstore = Chroma.from_documents(documents=splits, embedding=OpenAIEmbeddings())
retriever = vectorstore.as_retriever()

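# System prompt for answering; {context} is filled with the retrieved chunks.
system_prompt = (
    "You are an assistant for question-answering tasks. "
    "Use the following pieces of retrieved context to answer the question. "
    "If you don't know the answer, say that you don't know. "
    "Use three sentences maximum and keep the answer concise."
    "\n\n"
    "{context}"
)

# Prompt without chat history (single-turn question answering).
prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system_prompt),
        ("human", "{input}"),
    ]
)

# Prompt that also receives the running chat history.
qa_prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system_prompt),
        MessagesPlaceholder("chat_history"),
        ("human", "{input}"),
    ]
)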

llm = ChatOpenAI()

# Single-turn RAG chain: retrieve chunks, then stuff them into the prompt.
question_answer_chain = create_stuff_documents_chain(llm, prompt)
rag_chain = create_retrieval_chain(retriever, question_answer_chain)

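# Prompt that rewrites a follow-up question into a standalone one.
contextualize_q_system_prompt = (
    "Given a chat history and the latest user question, "
    "which might reference context in the chat history, "
    "formulate a standalone question that can be understood without the chat history. "
    "Do NOT answer the question; just reformulate it if needed and otherwise return it as is."
)

contextualize_q_prompt = ChatPromptTemplate.from_messages(
    [
        ("system", contextualize_q_system_prompt),
        MessagesPlaceholder("chat_history"),
        ("human", "{input}"),
    ]
)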

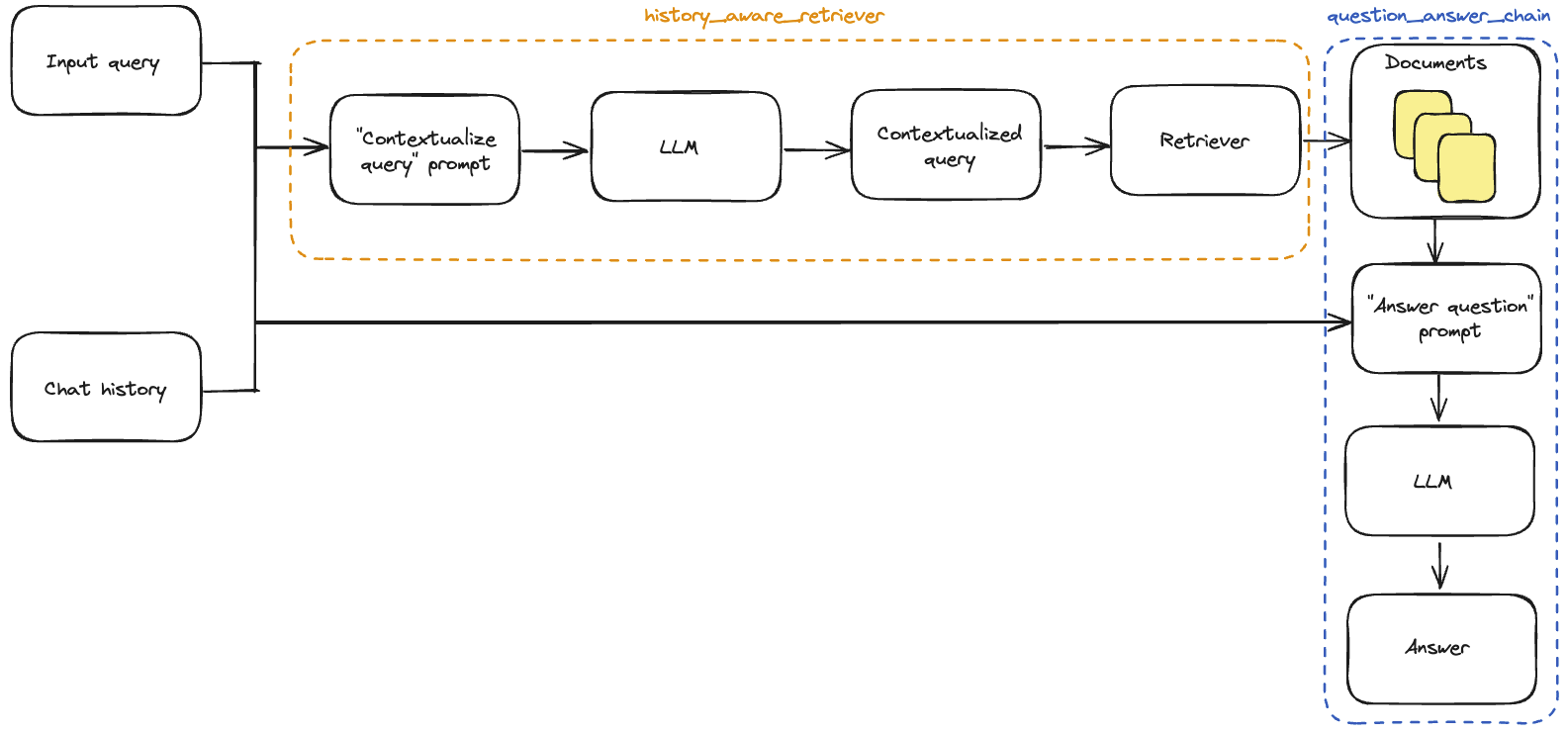

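# Retriever that first rewrites the question using the chat history.
history_aware_retriever = create_history_aware_retriever(
    llm, retriever, contextualize_q_prompt
)

# Rebuild the RAG chain so that both retrieval and answering see the history.
question_answer_chain = create_stuff_documents_chain(llm, qa_prompt)
rag_chain = create_retrieval_chain(history_aware_retriever, question_answer_chain)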

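# In-memory store mapping each session id to its chat history.
store = {}


def get_session_history(session_id: str) -> BaseChatMessageHistory:
    # Create the history lazily the first time a session id is seen.
    if session_id not in store:
        store[session_id] = ChatMessageHistory()
    return store[session_id]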

# Wrap the chain so history is loaded and saved automatically per session.
conversational_rag_chain = RunnableWithMessageHistory(
    rag_chain,
    get_session_history,
    input_messages_key="input",
    history_messages_key="chat_history",
    output_messages_key="answer",
)

|
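The per-session lookup that `get_session_history` performs can be tried without any LangChain or OpenAI dependency. The following is a minimal sketch of that pattern only: `InMemoryHistory` is a hypothetical stand-in for `ChatMessageHistory`, and the session ids and messages are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryHistory:
    """Hypothetical stand-in for ChatMessageHistory: a list of (role, text) pairs."""

    messages: list = field(default_factory=list)

    def add_message(self, role: str, text: str) -> None:
        self.messages.append((role, text))


# One history object per session id, created lazily on first access.
store = {}


def get_session_history(session_id: str) -> InMemoryHistory:
    if session_id not in store:
        store[session_id] = InMemoryHistory()
    return store[session_id]


# Two turns in one session and one turn in another (illustrative data).
get_session_history("abc123").add_message("human", "What is task decomposition?")
get_session_history("abc123").add_message("ai", "Breaking a task into smaller steps.")
get_session_history("xyz789").add_message("human", "Hello")

print(len(store["abc123"].messages))  # 2
print(len(store["xyz789"].messages))  # 1
```

Because each session id maps to its own history object, conversations do not leak into one another. In the listing above, `RunnableWithMessageHistory` takes the session id from the `configurable` section of the config passed to `invoke`, for example `config={"configurable": {"session_id": "abc123"}}`.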